Strengthening User Experience with Embedding/Vector Based Personalization

Introduction

In the constantly changing world of digital communication, personalization has become essential to the user experience. It is the practice of tailoring services, recommendations, and content to each user's needs, tastes, and habits. This tailored approach changes how users interact with digital platforms, giving them a sense of relevance and connection that encourages them to return.

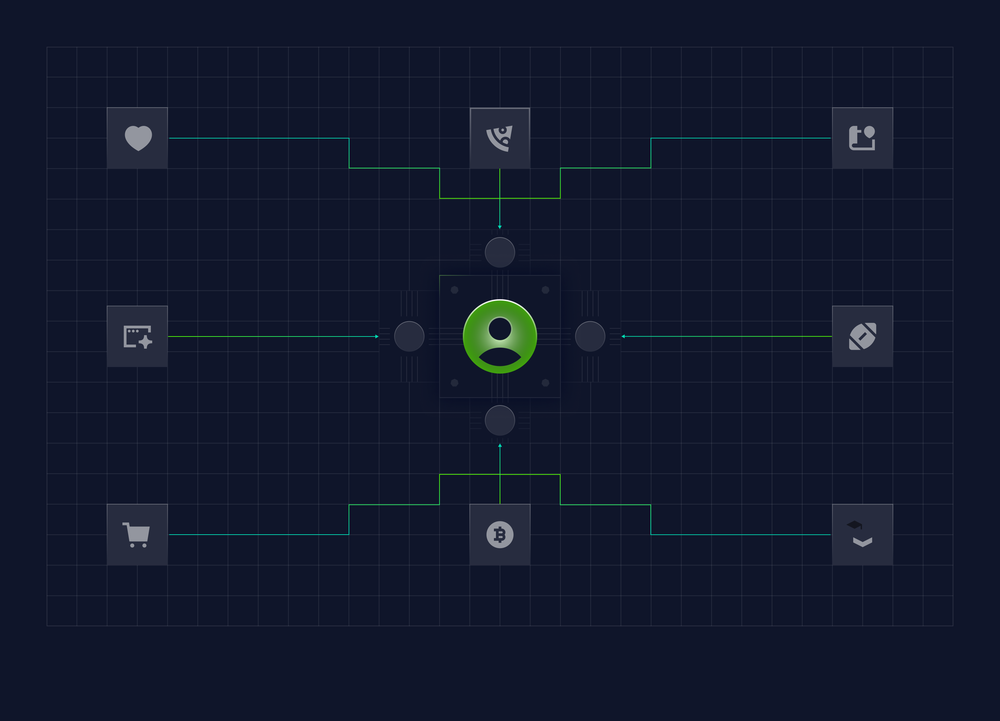

With the introduction of embedding (vector-based) personalization, the personalization journey has reached an exciting new frontier. This method uses machine learning to turn large amounts of user data into numerical vectors. These vectors, also known as embeddings, capture user preferences and interests in a form that machines can compare and act on.

Traditional methods depended mainly on keyword matching and explicit user input. With vector embeddings, algorithms can instead recognize and anticipate user preferences with a level of nuance that was previously out of reach. This not only improves the accuracy of suggestions but also opens new ways for people to discover material they connect with more deeply.

The significance of this method is hard to overstate. When it comes to building intuitive and immersive experiences, embedding-based personalization is at the forefront.

Exploring the Mechanics of Embedding in Personalization

What is the purpose of vector-based embeddings?

Vector-based embeddings are a foundation of contemporary AI and machine learning applications. In essence, they are multi-dimensional numerical representations of data points, typically derived from text, images, or other complex inputs. Embeddings give algorithms a way to process and compare the many subtleties of human language and behavior.

Vector embeddings are important in the context of personalization. They map the complex patterns of user interactions into a mathematical space in which semantic relationships and similarities are preserved. Through this transformation, machines can identify subtle links between disparate items, such as products, media, or articles, that share underlying themes or traits, even when those connections aren't immediately apparent.
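To make the idea of "similarity in a mathematical space" concrete, here is a minimal sketch that compares items by cosine similarity. The three-dimensional vectors and item names are made up for illustration; real embeddings are learned by a model and usually have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-written embeddings (assumption: not produced by a real model).
item_embeddings = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "cookbook": [0.0, 0.1, 0.9],
}

shoes = item_embeddings["running shoes"]
sim_sneakers = cosine_similarity(shoes, item_embeddings["trail sneakers"])
sim_cookbook = cosine_similarity(shoes, item_embeddings["cookbook"])

# The two footwear items point in nearly the same direction, so they score
# higher than the unrelated cookbook, with no shared keyword required.
print(f"shoes vs sneakers: {sim_sneakers:.3f}")
print(f"shoes vs cookbook: {sim_cookbook:.3f}")
```

Note that "running shoes" and "trail sneakers" share no words, yet their vectors are close. That is the kind of link keyword matching would miss.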

These embeddings let machines perform complex tasks such as recommendation more effectively. By capturing the deeper semantic links between user preferences and available content, systems can curate experiences that are more relevant and engaging, which in turn can improve user satisfaction and loyalty.

Capabilities of vector search:

Vector search represents a paradigm shift in how we approach data retrieval and analysis. Traditional search methods rely on keyword matching, which can be limiting and often misses the mark when it comes to understanding user intent. Vector search, on the other hand, transforms data into a numeric representation suitable for search scenarios. This numeric form enables algorithms to compare the 'distance' between vectors, effectively measuring how closely related different pieces of content are to a user's query or profile.

One of the most significant capabilities of vector search is its ability to find similar data points without exact keyword matches. This is particularly useful in cases where users may not know the exact terms to describe what they're looking for or when their intent is more nuanced than what keywords can capture.
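The distance comparison described above can be sketched as a brute-force vector search: score every stored vector against the query and keep the top results. The document IDs, the vectors, and the `vector_search` helper are hypothetical; production systems typically replace the full scan with an approximate nearest-neighbor index.

```python
import heapq
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def vector_search(query, index, k=2):
    """Return the k (doc_id, score) pairs most similar to the query vector."""
    scored = ((doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])

# Hypothetical document embeddings (assumption: hand-written for illustration).
index = {
    "laptop-review": [0.9, 0.1, 0.1],
    "notebook-buying-guide": [0.8, 0.3, 0.0],
    "pasta-recipe": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of a query such as "portable computer".
query = [0.85, 0.2, 0.05]

results = vector_search(query, index, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

Neither of the two returned documents needs to contain the words of the query; they are returned because their vectors lie close to the query vector.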

Moreover, vector search plays a key role in applications such as semantic and multi-modal search. Rather than only returning results that match the query's wording, semantic search uses vector embeddings to interpret the meaning of user queries. Multi-modal search goes one step further: by mapping information from several modalities, such as text, images, and audio, into a single vector space, it enables a fuller understanding of content and user intent.

By using vector-based embeddings and search, businesses can build tailored experiences that engage people more deeply. This fosters a sense of relevance and connection, which is crucial in today's digital environment.

Vector Based Personalization Strategies

What is a two-tower recommendation model?

The two-tower recommendation model is a neural framework used in recommender systems to enhance personalization. The model is named for its dual structure: it consists of two neural networks, each serving as a "tower" within the architecture.

The purpose of the two-tower model is to match users with items they are likely to be interested in. It does this by learning from user-item interactions, producing a predictive model that can suggest new items to users based on their past behavior.

How the two neural networks produce personalized recommendations:

The two-tower model splits the recommendation process into two parts:

- The User Tower processes user-related data, such as historical interactions, preferences, and demographic information, to create a user embedding.

- The Item Tower processes item-related data, like descriptions, categories, and other metadata, to create an item embedding.

These embeddings represent users and items in a high-dimensional space where similar users and items are closer together. The model then calculates the similarity between user and item embeddings to generate personalized recommendations.
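The split into a user tower and an item tower can be sketched as follows. This is only an illustration of the data flow under simplifying assumptions: each tower is a single linear layer with random, untrained weights, and the feature vectors, item names, and helper functions are hypothetical. A real model would use deeper networks trained on user-item interactions.

```python
import random

def make_tower(input_dim, embedding_dim, rng):
    """Random weights for one linear layer (a stand-in for a trained network)."""
    return [[rng.uniform(-1, 1) for _ in range(input_dim)] for _ in range(embedding_dim)]

def embed(tower, features):
    """Apply the tower: a matrix-vector product that yields an embedding."""
    return [sum(w * x for w, x in zip(row, features)) for row in tower]

def score(user_embedding, item_embedding):
    """Dot product, used here as the similarity between user and item."""
    return sum(u * i for u, i in zip(user_embedding, item_embedding))

rng = random.Random(0)  # fixed seed so the sketch is reproducible
EMBEDDING_DIM = 4

# The towers take different inputs but produce embeddings of the same size.
user_tower = make_tower(input_dim=3, embedding_dim=EMBEDDING_DIM, rng=rng)
item_tower = make_tower(input_dim=5, embedding_dim=EMBEDDING_DIM, rng=rng)

# Hypothetical feature vectors (e.g. encoded history, categories, metadata).
user_features = [1.0, 0.0, 0.5]
items = {
    "item_a": [1.0, 0.0, 0.0, 0.2, 0.1],
    "item_b": [0.0, 1.0, 0.0, 0.9, 0.3],
    "item_c": [0.0, 0.0, 1.0, 0.4, 0.8],
}

user_embedding = embed(user_tower, user_features)
item_embeddings = {name: embed(item_tower, feats) for name, feats in items.items()}

# Rank items by similarity to the user; with untrained weights the order is arbitrary.
ranking = sorted(
    item_embeddings,
    key=lambda name: score(user_embedding, item_embeddings[name]),
    reverse=True,
)
print(ranking)
```

The point of the sketch is structural: user and item inputs never meet until the final similarity step, which is what allows each side to be computed independently.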

What is a two-tower architecture?

The two-tower architecture is the structural design of the two-tower recommendation model. It includes:

- The Query Tower: This neural network processes the user's query, which could be implicit (derived from user behavior) or explicit (a direct search or request). In a recommendation setting, this role is played by the User Tower described above.

- The Item Tower: This neural network processes the attributes of the items to be recommended.

How it captures the semantics of query and candidate entities:

The two-tower architecture is adept at capturing the semantics of both queries and candidate entities by translating them into a shared embedding space. This allows the model to understand the context and meaning behind a user's query and the essence of the items in the database.

Benefits of mapping these to a shared embedding space:

Mapping queries and items to a shared embedding space has several advantages:

- It allows the model to compare items and queries directly, irrespective of their original format (text, image, etc.).

- It makes user intent and item relevance easier to capture, which results in more precise recommendations.

- It supports scalability, since the model can efficiently handle a high volume of requests and items.
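One common reason this design scales is that item embeddings do not depend on the user, so they can be computed once ahead of time and reused for every request. The sketch below illustrates that split under stated assumptions: `item_tower` and `user_tower` are trivial hypothetical stand-ins for trained networks, and a call counter is added only to show when the item tower runs.

```python
def item_tower(features):
    """Hypothetical stand-in for a trained item network (just scales inputs)."""
    item_tower.calls += 1
    return [2.0 * x for x in features]

item_tower.calls = 0  # counter used only to show when the tower is invoked

def user_tower(features):
    """Hypothetical stand-in for a trained user/query network."""
    return [0.5 * x for x in features]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Offline step: embed the whole catalog once and store the result.
catalog = {"item_a": [1.0, 0.0], "item_b": [0.0, 1.0], "item_c": [0.7, 0.7]}
item_index = {item_id: item_tower(feats) for item_id, feats in catalog.items()}
calls_after_indexing = item_tower.calls

def recommend(user_features, k=2):
    """Online step: embed the user, then score against the stored item vectors."""
    user_embedding = user_tower(user_features)
    ranked = sorted(
        item_index,
        key=lambda item_id: dot(user_embedding, item_index[item_id]),
        reverse=True,
    )
    return ranked[:k]

first = recommend([1.0, 0.2])
second = recommend([0.1, 1.0])
print(first, second)
print("item tower calls:", item_tower.calls)
```

Serving two requests does not trigger any further item-tower calls; only the user side is computed at request time. In larger systems the sorted scan would typically be replaced by an approximate nearest-neighbor lookup over the stored vectors.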

By using a two-tower recommendation model, businesses can apply vector-based personalization that is both accurate and scalable. This helps ensure that customers receive recommendations and content suited to their individual tastes. The approach plays a key role in building a tailored and intuitive user experience, which can increase user satisfaction and engagement.